AI and What the Regime is Doing With It

A 10-year moratorium on state enforcement of any law or regulation regarding AI draws bipartisan opposition.

Recently a colleague sent an article to me that made me realize how involved the Federal Government has become in Artificial Intelligence (AI). I’ll come back to the article in a moment, but it has to do with the 10-year moratorium on enforcement of any regulations or laws on AI included in the Big Butt-ugly Bill.

Subsection (c) states that no state or political subdivision may enforce any law or regulation regulating artificial intelligence models, artificial intelligence systems, or automated decision systems during the 10-year period beginning on the date of the enactment of this Act.1

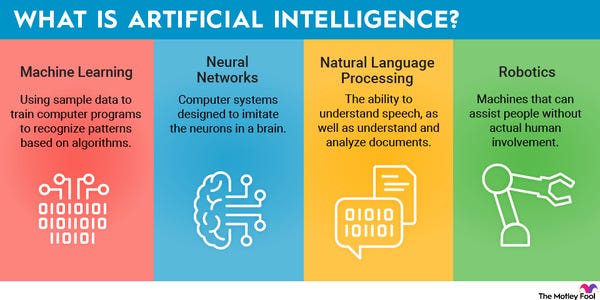

Maybe I am slow on the uptake here, but it seems that the U.S. government is seeking to use AI far more than might be wise. Don’t get me wrong, I am not saying we should ban AI, and I am not calling AI the devil. AI is a tool, and like any tool it can be used for good and for less than good purposes. It can also be mismanaged and misunderstood.

I wrote about the Make America Healthy Again (MAHA) Report that HHS sent out last week. It was filled with errors and was ultimately proven to have been largely written by AI. It included errors like citing publications that didn’t exist. This is not good.

Is this unplagiarism? I don’t know.

Anyway, this should have come as no surprise, as anyone who has received training on AI could tell you. MIT released a report in 2023 that mentioned this little issue.2 Again, maybe it’s just me, but shouldn’t professionals using AI be aware of the downsides of AI as well as the upsides? Perhaps?

Here is what the report says about how AI may distort reality:

Unified and consistent governance are the rails on which AI can speed forward. Generative AI brings commercial and societal risks, including protecting commercially sensitive IP, copyright infringement, unreliable or unexplainable results, and toxic content. To innovate quickly without breaking things or getting ahead of regulatory changes, diligent CIOs must address the unique governance challenges of generative AI, investing in technology, processes, and institutional structures. (p. 5)

Notice “unreliable or unexplainable results,” like ghost publications. And “toxic content,” like deciding one’s health care based on AI data that may rely on those unreliable or unexplainable results. Even with a reliable government, unfettered access to AI would not be tolerable. Under this regime, it is terrifying.

So, consider that AI is an emerging, largely unknown technology that is quickly taking the world by storm. We see it in higher education, government, healthcare, etc. It has already taken hold of so many aspects of our lives that it is difficult to keep up with. It is already being used to identify people’s faces so that ICE and other gestapo-type forces can deport those they deem illegal. And now the Big Butt-ugly Bill includes a rule that prohibits states from enforcing any law or regulation regulating AI. Does that seem like a good idea?

The article I mentioned at the beginning of this piece is titled, “State lawmakers push back on federal proposal to limit AI regulation.”3 The article states that 260 state legislators from ALL 50 states and across party lines sent a letter to Congress opposing the 10-year moratorium. Why would they do this?

The reason is simple. States have already begun enacting laws and regulations to keep AI from getting out of control. And now the Federal government, which is coming very late to the party, decides to put a 10-year moratorium on all the work the states have already done. Colorado, California, and Utah have already passed legislation regulating AI use, and 15 other states have proposed such legislation. Putting these regulations on hold now means putting them in limbo. For 10 years. That is a long time for a technology that changes every 24 hours. Or less. In 10 years, AI will be unrecognizable compared with what we have today.

The lawmakers argue that the decade-long moratorium would hinder their ability to protect residents from AI-related harms, such as deepfake scams, algorithmic discrimination and job displacement.4

States have begun placing regulations on AI because they saw the need for it and couldn’t afford to wait for the Feds to catch up.

We have evidence that the Federal government is already failing on this proposal. Marjorie Taylor Greene, to her credit, admitted she had not read this section of the bill before voting in favor of it. Had she known about this section, she claims, she would not have voted for it.

Rep. Marjorie Taylor Greene, a far-right Republican from Georgia who has fanned conspiracy theories about everything from 9/11 to Pizzagate, posted on X Tuesday that she is “adamantly” opposed to the provision that would prevent states from enforcing AI laws, though she voted in favor of the bill. She admitted that neither she nor her staff read the bill fully before she cast her vote.5

Furthermore, as I noted above, the MAHA report relied heavily on AI and was filled with errors and unreliable or unexplainable results. AI is being heavily pushed by Elon Muskrat as well. To be fair, a year ago, he was saying that AI represented an existential threat to humanity and that it would take all of our jobs.6 But now, he sings a different tune.7 Why do you suppose that might be? Maybe he profits from it…just maybe.

Here is where I think this proposal in the bill comes from: It is another example of the excessive use of unitary executive power that the regime is seeking to exert over our nation and our government. Why should the federal government be so concerned that the states might have regulations in place that differ from one another? Isn’t that the way with many of our laws and regulations? Of course it is. But the push from this regime is to grab power away from institutions of higher education, departments of the federal government, and now, the states.

We can’t take our eyes off these snakes for a second.

Thanks for reading.

Knowledge is power.

Greg

Committee on Energy and Commerce. US House of Representatives. (2025, May 11). https://docs.house.gov/meetings/IF/IF00/20250513/118261/HMKP-119-IF00-20250513-SD003.pdf

How generative AI will reshape the Enterprise. Databricks. (n.d.). https://www.databricks.com/resources/ebook/mit-cio-generative-ai-report

Fox-Sowell, S. (2025, June 3). State lawmakers push back on federal proposal to limit AI regulation. StateScoop. https://statescoop.com/state-lawmakers-push-back-federal-proposal-limit-ai-regulation/

Ibid. paragraph 2.

Ibid. paragraph 12.

Mesbahi, F. (2024, August 15). Elon Musk’s WARNING Leaves Audience SPEECHLESS. YouTube.

Salvaggio, E. (2025, June 4). Musk, AI, and the weaponization of “administrative error.” Tech Policy Press. https://www.techpolicy.press/musk-ai-and-the-weaponization-of-administrative-error/

Yeah, the Big Butt-Ugly Bill has lots of opponents now. There is the increase to the deficit, and there are the little "gotcha" clauses that do not belong in a budget bill. This AI thing is one of those. I'm thankful for our democracy, where there is normally a no-pass filter for bad ideas. Normally, if something doesn't appeal to most of us, it can't become law or be enforced because of the "checks and balances" in our system. Now, the wheels seem to be off the bus, and there is great danger in that.

I’m scared to death about AI…